Microsoft announced this week that its facial-recognition system is now more accurate in identifying people of color, touting (boasting about) its progress at tackling one of the technology’s biggest biases (prejudices).

But critics, citing Microsoft’s work with Immigration and Customs Enforcement, quickly seized on how that improved technology might be used. The agency contracts with Microsoft for cloud-computing tools that the tech giant says are largely limited to office work but can also include face recognition.

Columbia University professor Alondra Nelson tweeted, “We must stop confusing ‘inclusion’ in more ‘diverse’ surveillance (monitoring) systems with justice and equality.”

Facial-recognition systems more often misidentify people of color because of a long-running data problem: The massive sets of facial images they train on skew heavily toward white men. A Massachusetts Institute of Technology study this year of the face-recognition systems designed by Microsoft, IBM and the China-based Face++ found that the systems consistently gave the wrong gender for famous women of color including Oprah Winfrey, Serena Williams, Michelle Obama and Shirley Chisholm, the first black female member of Congress.

The companies have responded in recent months by pouring many more photos into the mix, hoping to train the systems to better tell the differences among more than just white faces. IBM said Wednesday it used 1 million facial images, taken from the photo-sharing site Flickr, to build the “world’s largest facial data-set,” which it will release publicly for other companies to use.

IBM and Microsoft say that allowed their systems to recognize gender and skin tone with much more precision. Microsoft said its improved system reduced the error rates for darker-skinned men and women by “up to 20 times,” and reduced error rates for all women by nine times.

Those improvements were heralded (hailed) by some for taking aim at the prejudices in a rapidly spreading technology, including potentially reducing the kinds of false positives that could lead police officers to misidentify a criminal suspect.

But others suggested that the technology’s increasing accuracy could also make it more marketable. The system should be accurate, “but that’s just the beginning, not the end, of their ethical obligation,” said David Robinson, managing director of the think tank Upturn.

At the center of that debate is Microsoft, whose multimillion-dollar contracts with ICE came under fire amid the agency’s separation of migrant parents and children at the Mexican border.

In an open letter to Microsoft chief executive Satya Nadella urging the company to cancel that contract, Microsoft workers pointed to a company blog post in January that said Azure Government would help ICE “accelerate recognition and identification.” “We believe that Microsoft must take an ethical stand, and put children and families above profits,” the letter said.

A Microsoft spokesman, pointing to a statement last week from Nadella, said the company’s “current cloud engagement” with ICE supports relatively anodyne (mild) office work such as “mail, calendar, messaging and document management workloads.” The company said in a statement that its facial-recognition improvements are “part of our ongoing work to address the industry-wide and societal issues on bias.”

Criticism of face recognition will probably expand as the technology finds its way into more arenas, including airports, stores and schools. The Orlando police department said this week that it would not renew its use of Amazon.com’s Rekognition system.

Companies “have to acknowledge their moral involvement in the downstream use of their technology,” Robinson said. “The impulse is that they’re going to put a product out there and wash their hands of the consequences. That’s unacceptable.”

Question 1. What is “one of the technology’s biggest biases” in Paragraph 1?
A. Class bias. B. Regional difference. C. Professional prejudice. D. Racial discrimination.

Question 2. What can we know about the improvement of facial-recognition technology?
A. Justice and equality have been truly achieved.
B. It is due to the expansion of the photo database.
C. It has already solved all the social issues on biases.
D. The separation of immigrant parents from their children can be avoided.

Question 3. What is the focus of the face-recognition debate?
A. Data problems. B. The market value. C. The application field. D. A moral issue.

Question 4. What is David Robinson’s attitude towards facial-recognition technology?
A. Skeptical. B. Approving. C. Optimistic. D. Neutral.

Question 5. We can infer from the last paragraph that Robinson thinks _____.
A. companies had better hide from responsibilities
B. companies deny problems with their technical process
C. companies should not launch new products on impulse
D. companies should be responsible for the new product and the consequences

Question 6. Which can be a suitable title for the passage?
A. The wide use of Microsoft system B. Fears of facial-recognition technology C. The improvement of Microsoft system D. Failure of recognizing black women

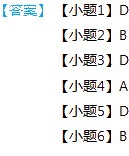

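Solution

Answer

Explanation

Key points tested: This set mainly tests students’ reading comprehension of an argumentative text on technology. Answering requires analyzing, from the passage, the improvements to facial-recognition technology, the focus of the controversy, and the attitudes of the parties involved.

Core approach:

- Locate key information: use keywords in the question (such as “bias,” “reason for the technical improvement,” “moral controversy”) to find the relevant paragraph quickly.

- Distinguish between options: watch for absolute wording in the options (such as “has already solved all the issues”) versus the attitude implied in the passage (such as “ethical obligation”).

- Infer the author’s intent: use details (such as Microsoft’s work with ICE) to understand the potential risks of how the technology is applied.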

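Question 2

Question: What is the reason for the improvement in facial-recognition technology?

Key information:

- Data problem: white men were overrepresented in the original systems’ training data.

- Remedy: Microsoft and IBM expanded their databases (for example, IBM used 1 million Flickr photos).

Conclusion: the improvement comes from the expansion of the photo database (option B).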

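Question 3

Question: What is the focus of the facial-recognition debate?

Key information:

- Microsoft’s work with ICE drew controversy (involving the separation of migrant families).

- An expert stresses that the technology must also meet ethical obligations.

Conclusion: the focus is a moral issue (option D).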

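Question 4

Question: What is David Robinson’s attitude toward the technology?

Key information:

- Robinson holds that accuracy is just “the beginning, not the end,” and that companies must take responsibility for the consequences.

- He criticizes companies for putting a product out and then washing their hands of the consequences.

Conclusion: his attitude is skeptical and critical (option A).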

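Question 5

Question: What can be inferred from the last paragraph?

Key information:

- Robinson stresses that companies must acknowledge their moral involvement in the downstream use of their technology.

- This corresponds directly to the option “companies should be responsible for the new product and the consequences” (option D).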

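Question 6

Question: What is a suitable title for the passage?

Key information:

- Technical improvement coexists with moral controversy (for example, Microsoft touting its progress versus criticism of its use in immigration enforcement).

- The title needs to reflect the central tension (technical progress versus concern).

Conclusion: “Fears of facial-recognition technology” (option B) best fits the passage as a whole.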